At the end of last year, we published our predictions for the world of artificial intelligence in 2023. To keep ourselves honest, with 2023 now coming to a close, let’s revisit these predictions to see how things played out.

There is much to learn from these retrospectives about the state and trajectory of AI today. (Keep an eye out for our 2024 AI predictions, coming out next week!)

OpenAI released GPT-4 on March 14, 2023. It was indeed a big deal.

Nine months later, GPT-4 remains the most powerful large language model (LLM) in existence and the yardstick against which every other model is measured. GPT-4 has helped propel OpenAI to a stunning leap in annualized revenue this year, up from the company’s reported $28 million of 2022 revenue.

In last year’s predictions, we speculated that GPT-4 might be multimodal. While the initially released version of GPT-4 did not have multimodal capabilities, OpenAI has since announced an updated version named GPT-4V (the V stands for “vision”) that can analyze images as well as text. Expect this trend to continue, with OpenAI’s models becoming increasingly multimodal, incorporating audio, video, music and beyond, as time goes on.

We predicted that GPT-4 would be trained on vastly more data than any model that had come before it. This proved resoundingly true. For context, GPT-3 was trained on roughly 300 billion tokens of data. We wrote last year that GPT-4 would “be trained on a dataset at least an order of magnitude larger than this—perhaps as large as 10 trillion tokens.” The reality was even more astonishing: GPT-4 was trained on a whopping 13 trillion tokens. (OpenAI hasn’t officially shared details about GPT-4’s training, but information about the model has surfaced that the AI community has come to view as generally accurate.)

We also conjectured that GPT-4 would not be much larger than GPT-3 in terms of parameter count. Assessing this prediction requires some explanation and illuminates an important part of GPT-4’s architecture. GPT-3 had ~175 billion parameters. GPT-4 reportedly has ~1.8 trillion parameters, a far larger number.

However, unlike previous GPT models, GPT-4 is a “mixture of experts” model, an innovative architectural choice by the OpenAI team. What this means is that the GPT-4 model is actually made up of several “sub-models” (reportedly 16), with only one or a few of them in use at any given time.
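To make the mixture-of-experts idea concrete, here is a minimal Python sketch, assuming a standard top-k gating scheme: a gating function scores every expert for a given input, only the k highest-scoring experts run, and their outputs are blended with softmax weights. Everything here is hypothetical and illustrative: the toy experts are random linear maps, each expert’s gate is a random scoring vector, and the value of k is our own choice, since OpenAI has not disclosed how GPT-4 routes between its sub-models. The helper `total_and_active_params` shows why total parameter count and per-input compute can diverge. With the reported figures of 16 sub-models at ~110 billion parameters each, the total comes to roughly 1.76 trillion (near the reported ~1.8 trillion; the gap could reflect rounding or shared, non-expert parameters), while a hypothetical top-2 routing would use only about 220 billion parameters per input.

```python
import math
import random

# Reported (unofficial) figures; treat as approximate.
NUM_EXPERTS = 16
PARAMS_PER_EXPERT = 110_000_000_000


def total_and_active_params(num_experts, params_per_expert, k):
    """Total vs. per-input parameter counts for a top-k mixture of experts.

    Ignores shared (non-expert) parameters such as attention layers.
    """
    return num_experts * params_per_expert, k * params_per_expert


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(seed, dim):
    """Hypothetical toy expert: a fixed random linear map standing in for a feed-forward block."""
    rng = random.Random(seed)
    w = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(dim)]

    def expert(x):
        return [sum(w[i][j] * x[j] for j in range(dim)) for i in range(dim)]

    return expert


def moe_forward(x, experts, gate_vectors, k):
    """Score every expert, run only the top-k, and blend their outputs."""
    # Gate score per expert: dot product of the input with that expert's gate vector.
    scores = [sum(g * xi for g, xi in zip(gv, x)) for gv in gate_vectors]
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    weights = softmax([scores[i] for i in chosen])  # renormalized over chosen experts only
    out = [0.0] * len(x)
    for w, i in zip(weights, chosen):
        y = experts[i](x)  # unchosen experts are never evaluated
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, chosen


if __name__ == "__main__":
    total, active = total_and_active_params(NUM_EXPERTS, PARAMS_PER_EXPERT, k=2)
    print(f"total ~{total / 1e12:.2f}T params, active per input ~{active / 1e9:.0f}B")

    dim = 4
    experts = [make_expert(seed, dim) for seed in range(NUM_EXPERTS)]
    rng = random.Random(0)
    gates = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(NUM_EXPERTS)]
    y, chosen = moe_forward([0.5, -1.0, 0.25, 2.0], experts, gates, k=2)
    print("experts used:", chosen)
```

The key design point is that unchosen experts are never called, which is what lets a model hold many parameters while spending compute on only a few experts per input. Running the script prints `total ~1.76T params, active per input ~220B` for the assumed k=2, followed by the indices of the two experts the toy gate selected.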

Each sub-model reportedly has ~110 billion parameters, so each one is individually smaller than GPT-3.

In last year’s predictions, we noted that there is a finite amount of text data in the world available to train language models (taking into account all the world’s books, news articles, research papers, code, Wikipedia articles, websites and so on) and predicted that, as LLM training efforts rapidly scale, we would soon begin to exhaust this finite resource. A year later, we are much closer to running out of the world’s supply of text training data.

As of the end of 2022, the largest known LLM training corpus was the 1.4 trillion token dataset that DeepMind used to train its Chinchilla model. LLM builders have blown past that mark in 2023.

Meta’s popular Llama 2 model (released in July) and Alphabet’s PaLM 2 model (released in May) were both trained on datasets larger than Chinchilla’s. As mentioned above, OpenAI’s GPT-4 is reported to have been trained on 13 trillion tokens.

In October, AI startup Together released an LLM dataset that contains a stunning 30 trillion tokens, by far the largest such dataset yet created. It is impossible to determine exactly how many total usable tokens of text data exist in the world. Some estimates had actually pegged the number below 30 trillion.
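The scale-up in training data described in this section can be put in rough numbers. The back-of-the-envelope ratios below use only the token counts cited in this article: GPT-3 at roughly 300 billion, Chinchilla at 1.4 trillion, GPT-4 at a reported (unofficial) 13 trillion, and Together’s dataset at 30 trillion.

```latex
\begin{align*}
\frac{\text{GPT-4 tokens}}{\text{GPT-3 tokens}} &\approx \frac{13\times10^{12}}{3\times10^{11}} \approx 43 \\
\frac{\text{GPT-4 tokens}}{\text{Chinchilla tokens}} &\approx \frac{13\times10^{12}}{1.4\times10^{12}} \approx 9.3 \\
\frac{\text{Together dataset tokens}}{\text{GPT-4 tokens}} &\approx \frac{30\times10^{12}}{13\times10^{12}} \approx 2.3
\end{align*}
```

On these figures, GPT-4’s corpus is roughly 43 times GPT-3’s, well beyond the “order of magnitude” (10×) we had predicted.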

While the new Together dataset suggests that those estimates were too low, it is clear that we are fast approaching the limits of available text training data. Those at the cutting edge of LLM research are well aware of this problem and are actively working to address it. In an initiative called Data Partnerships, OpenAI has solicited partnerships with organizations around the world to gain access to new sources of training data.

In September, Meta announced a new model named Nougat that uses advanced OCR to turn the contents of old scientific books and journals into a more LLM-friendly data format. As AI researcher Jim Fan put it: “This is the way to unlock the next trillion high-quality tokens, currently frozen in textbook pixels that are not LLM-ready.” These are clever initiatives to expand the pool of available text training data and stave off the looming data shortage.

But they will only postpone, not resolve, the core dilemma created by insatiably data-hungry models.

Two companies, Alphabet’s Waymo and GM’s Cruise, made driverless car services available to members of the public in 2023: just like Uber, but with no one behind the wheel. Many dozens of residents in San Francisco and in Phoenix began to use fully driverless vehicles as their go-to mode of transportation this year: to commute to and from work, to get around on the weekends, to get home after a night out.

The timeline for driverless taxi services to expand beyond these initial markets has, however, become murkier after recent events. Following a serious accident in October and the ensuing regulatory challenges, General Motors has taken Cruise’s entire driverless vehicle fleet off the road, fired Cruise’s CEO and announced large budget cuts to its driverless car program for 2024. Cruise had previously announced plans to expand its driverless service to cities including Miami, Austin, Phoenix and Houston; these plans have been shelved.

On the other hand, Waymo continues to expand its autonomous vehicle fleet in San Francisco and Phoenix. In each city, Waymo passengers now take over 10,000 driverless rides per week. Over the course of 2023, Waymo has completed over 700,000 driverless rides with passengers.

With Cruise floundering, Waymo appears poised to emerge as the clear leader in this nascent market as we head into 2024.

Midjourney managed to resist the siren call of venture capital this year after all. It was not for lack of trying on the part of venture capitalists.

Midjourney, which generates images from text prompts, is one of the hottest generative AI startups in the world. It has tens of millions of users and is on track to do $200 million in revenue in 2023. It has accomplished all of this without raising a cent of outside capital.

Seemingly every venture capitalist in Silicon Valley has tried to court Midjourney CEO/founder David Holz and convince him to take their money. Holz continues to politely refuse them all. Midjourney is run leanly (the company had only 40 employees as of September) and has been profitable since its earliest days, giving Holz the luxury of declining any equity financing.

To quote from an article on Midjourney and Holz: “To put it charitably, he doesn’t need VC in his life.”

The dominant paradigm for finding information on the internet has remained largely unchanged for two decades: type a query into Google’s search bar, get back a list of 10 blue links and click on one to hunt down the information you are looking for. In 2023, a radically new search experience went mainstream: a conversational interface powered by a large language model trained on the entire internet.

The product at the forefront of this paradigm shift is, of course, ChatGPT. ChatGPT is by far the fastest-growing consumer application in history, having reached 100 million users a mere two months after its launch—far faster than TikTok, Instagram, Snapchat or any other product before it. In 2023, for many millions of people, ChatGPT rather than Google became the go-to destination to find information online.

The rapid rise of conversational search this year goes beyond ChatGPT. Google itself has rolled out a chat-based search product called Bard, which has well over 100 million users. Microsoft Bing has launched a similar product.

Younger upstarts with similar offerings, including Perplexity and You.com, have likewise seen rapid adoption. And internet search is not the only kind of search that is being revolutionized by large language models.

Enterprise search (the way that organizations navigate and retrieve their internal data) is likewise undergoing a profound transformation. A new generation of LLM-powered enterprise search platforms, including Glean and Hebbia, has seen dramatic revenue growth this year as customers rapidly adopt these more powerful search experiences.

As we predicted, 2023 was the year that humanoid robots began to go mainstream.

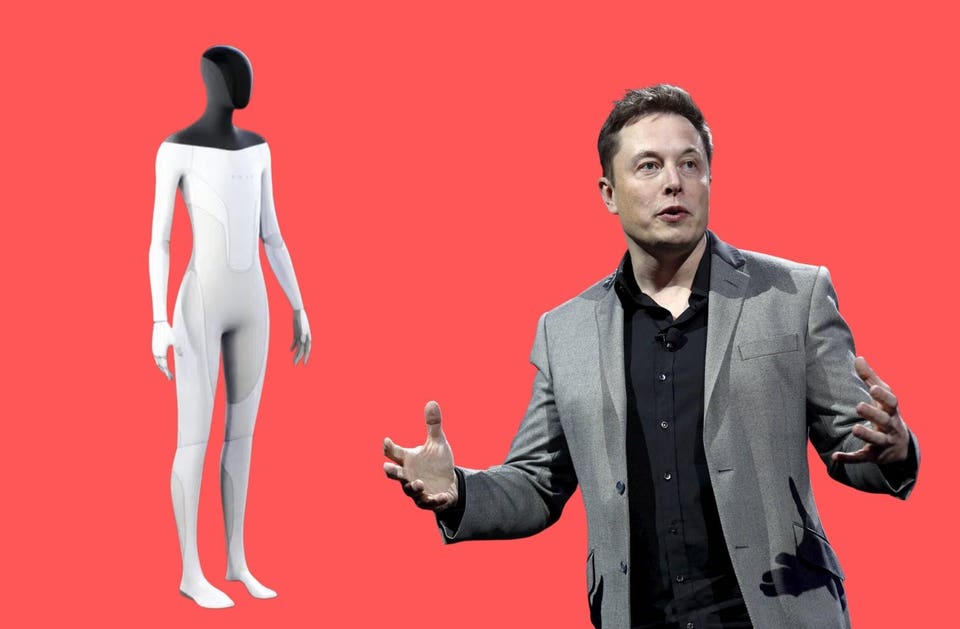

Leading the charge in this area is Tesla, whose humanoid robot effort, known as Optimus, advanced this year. It is a mistake to underestimate what Tesla can achieve when it devotes its full resources to a task, and the company is fully committed to Optimus. In Tesla CEO Elon Musk’s words: “I am surprised that people do not realize the magnitude of the Optimus robot program. The importance of Optimus will become apparent in the coming years. Those who are insightful or looking, listening carefully, will understand that Optimus will ultimately be worth more than the car business.”

Alongside Tesla, a promising cohort of humanoid robot startups emerged and attracted considerable buzz and funding this year.

1X Technologies raised a $24 million Series A from OpenAI and Tiger Global in March, then turned around and was reported to be raising a $75 to $100 million Series B from SoftBank months later. Figure raised a $70 million Series A in May and in September released a video of its robot walking bipedally, a major technical milestone. Vancouver-based Sanctuary AI unveiled its next-generation humanoid robot platform in May; it was later named to Time Magazine’s list of the Best Inventions of 2023.

Some forward-thinking VCs and technology leaders (though still not many) are beginning to pay attention. As Vinod Khosla put it: “The thing nobody talks about is that in 10 years we’ll have a million bipedal robots and in 25 years we’ll have a billion. You’ll buy yours for $10k and it will be as important to your life as your smartphone is now.”

As with autonomous vehicles, the development and deployment of humanoid robots will play out over many years. It will not happen overnight. But the field took a big step forward in 2023.

It is easy to forget that, as of the end of 2022, “LLMOps” was a term that hardly anyone used or was familiar with. The idea of LLMOps has proliferated in 2023, with introductory blog posts on the topic published by a wide range of organizations.

CB Insights has published research on the space, and numerous VC firms have penned thought pieces on the topic. LLMOps (that is, tooling for large language models) has indeed become an important new category this year as adoption of language models has spread. A wave of buzzy young startups has emerged to fill this market need, from vector database companies like Pinecone and Weaviate to RAG providers like LlamaIndex to RLHF entrants like Adaptive and Surge.
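For readers unfamiliar with two of these categories, the Python sketch below shows in miniature what a vector database and a retrieval-augmented generation (RAG) pipeline do: documents are turned into vectors, a query is matched to its nearest neighbors by cosine similarity, and the retrieved passages are placed into the prompt sent to an LLM. It is a hypothetical toy, not how Pinecone, Weaviate or LlamaIndex are implemented or called: `embed` is a bag-of-words stand-in for a learned embedding model, `ToyVectorStore` does brute-force search rather than using an approximate nearest-neighbor index, and no LLM is actually invoked.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())  # missing tokens count as 0
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorStore:
    """Stores (vector, text) pairs and answers nearest-neighbor queries by brute force."""

    def __init__(self):
        self.items = []

    def add(self, text):
        self.items.append((embed(text), text))

    def search(self, query, k=2):
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]


def build_rag_prompt(store, question, k=2):
    """Retrieval-augmented generation: place the retrieved passages ahead of the question."""
    passages = store.search(question, k)
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    store = ToyVectorStore()
    for doc in [
        "Waymo completed over 700,000 driverless rides in 2023.",
        "Midjourney had about 40 employees as of September.",
        "Together released a dataset with 30 trillion tokens.",
    ]:
        store.add(doc)
    # In a real pipeline this prompt would be sent to an LLM; that call is out of scope here.
    print(build_rag_prompt(store, "How many tokens are in the Together dataset?", k=1))
```

With these three sample passages (facts taken from this article), the question about the Together dataset retrieves the Together sentence, since it shares the most words with the query. A production system would swap in learned embeddings and an approximate index, but the retrieve-then-prompt shape stays the same.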

According to Google Scholar, the AlphaFold paper was cited ~12,800 times in 2023, almost double the figure from 2022 (~6,850). With generative AI opening up vast new opportunities in biology, we expect this momentum to continue in 2024. A few months ago, Google DeepMind and Isomorphic Labs unveiled “the next generation of AlphaFold,” a dramatically improved AI system that understands not just proteins but also DNA, RNA, ligands and other biological molecules.

The concept of foundation models for robotics gathered real momentum this year. In June, DeepMind announced a model it described as “a self-improving foundation agent for robotic manipulation,” which is trained on a dataset diverse enough that it can generalize to new tasks and new hardware with little or no fine-tuning. A month later, another Google team published Robotics Transformer 2 (RT-2), a “vision-language-action model” that combines web-scale knowledge (like that possessed by GPT-4) with the ability to take action in the real world.

For example, when presented with a toy horse and a toy octopus and instructed to “pick up the land animal,” the robot will pick up the horse even if it has never seen this example before.

OpenAI, on the other hand, published no work on robotics this year. The organization made the strategic decision a few years ago to step back from robotics research, and that decision appears not to have changed this year.

Don’t be surprised, however, to see OpenAI ramp up its activity in this field again before long. On the startup side, a handful of companies are likewise pushing forward the state of the art in robotics foundation models. Foundation models for robotics will lag foundation models for language by many years.

Their ultimate impact, though, may prove even larger.

The semiconductor manufacturing supply chain has only become a more hot-button topic in 2023. But compared to 2022, when several massive investment commitments were made to build semiconductor manufacturing facilities on U.S. soil ($40 billion in Arizona, up to $100 billion in New York, $30 billion in Arizona, another $20 billion in Ohio), very few such net new commitments were made in 2023. Perhaps the most notable 2023 commitment came last week, when Amkor Technology announced it would invest $2 billion to build a new semiconductor packaging facility in Arizona.

Despite the shortage of new commitments this year, make no mistake: bringing semiconductor production to U.S. soil remains a major long-term priority for the U.S. government and an important dynamic to keep an eye on going forward.

From: forbes

URL: https://www.forbes.com/sites/robtoews/2023/12/10/how-accurate-were-our-2023-ai-predictions/