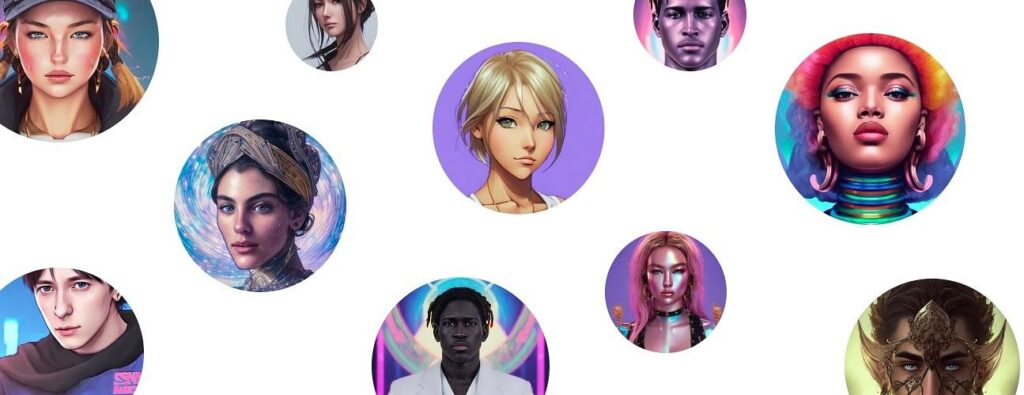

Lensa AI has been making the rounds on social media, with users posting colourful, AI-generated selfies made with the app. It also became the No. 1 app in the photo and video category on Apple’s charts, but it has received backlash from artists and tech experts.

One of the major reasons for the backlash is that the app can be used to generate non-consensual soft porn. Quite a few such pictures can be seen on Twitter, with breasts and nipples clearly visible. Some of the images also show the faces of recognisable people.

Editing Photoshopped images

To check this, TechCrunch tested the app using photoshopped images. It found that the AI accepted these images and disabled its not-safe-for-work (NSFW) filter. In effect, the app takes photoshopped images and recreates them.

This means that any user willing to put in the work of creating a handful of fake images can get Lensa AI to churn out a number of potentially problematic images.

In response to this test, Prisma Labs’ CEO and co-founder Andrey Usoltsev told TechCrunch that the app produces such results only if it is intentionally provoked into creating NSFW content, and that it will not produce such images accidentally. Stability AI’s Stable Diffusion model includes adaptations that should make it harder for users to generate nude images, but any tech-savvy user can circumvent them.

He also added, “To enhance the work of Lensa, we are in the process of building the NSFW filter. It will effectively blur any images detected as such. It will remain at the user’s sole discretion if they wish to open or save such imagery.”

For those unfamiliar, Stable Diffusion is an AI model that has been trained on a large number of images and art pieces from the internet. Because the model can be trained, a person bent on illegal or harmful work can find a way to retrain it.

Modelled on styles from other artists

The second issue is that the filters are modelled after the styles of other artists, which raises questions about the integrity and ethics of the filters. Many artists have claimed that the app is generating art using stolen assets.

“Have had to unfollow many people this week because they insisted on using Lensa app or something similar and posted it as their pfp. It’s been really disappointing to see so many people refuse to see why generating art using stolen assets is wrong and bad actually.” Kim Mihok (@hapicatART), December 6, 2022

“why are you all using that Lensa thing. it’s clearly stealing from actual artists lol” 43 year old complaining about morning people (@rachelmillman), December 3, 2022

“Seeing a Lensa portrait where what was clearly once an artist’s signature is visible in the bottom right and people are still trying to argue it isn’t theft.” Lauryn Ipsum (@LaurynIpsum), December 6, 2022

There are also questions about how long the app will store the photos and whether other users could use them inappropriately.

What is Lensa AI?

The app, developed by Prisma Labs Inc, enhances photos by using different tools to remove so-called imperfections. It also allows users to replace or blur backgrounds, apply art filters, and add borders and special effects.

The app transforms images into various art styles such as cartoons, sketches, watercolours or anime. It can be downloaded from both the Apple and Android app stores. In India, the app charges a subscription fee of Rs 2,499, with a free trial for the first week.


From: freepressjournal

URL: https://www.freepressjournal.in/business/lensa-ai-self-portrait-app-can-be-provoked-to-generate-non-consensual-soft-porn