BIG THINGS HAPPEN when computers get smaller. Or faster. And quantum computing is about chasing perhaps the biggest performance boost in the history of technology.

The basic idea is to smash some barriers that limit the speed of existing computers by harnessing the counterintuitive physics of subatomic scales. If the tech industry pulls off that, ahem, quantum leap, you won’t be getting a quantum computer for your pocket, so don’t start saving for an iPhone Q. Quantum computers won’t replace conventional computers.

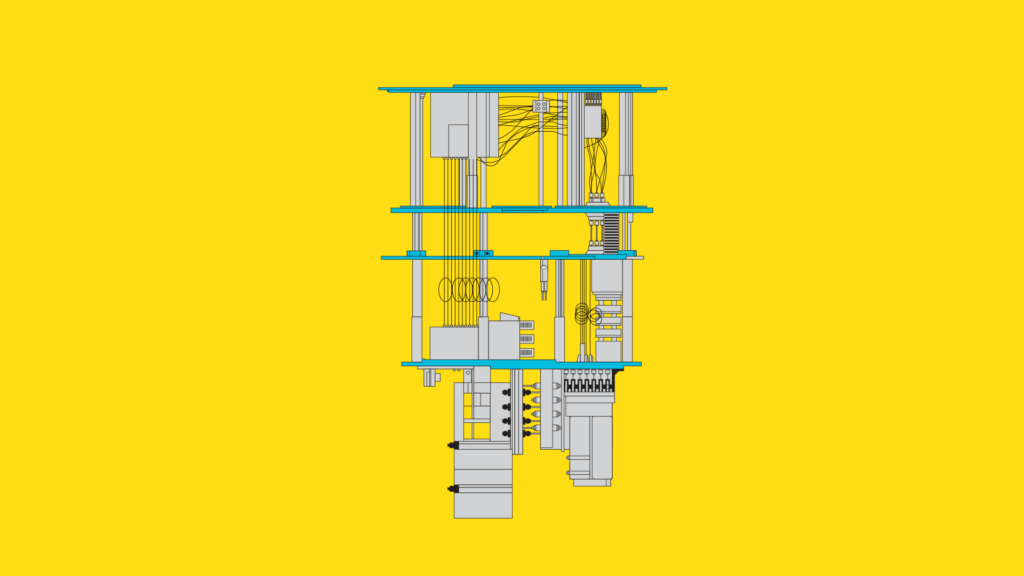

Instead, many experts envision the quantum computer as a specialized chip, part of a conventional supercomputer accessed via the cloud. For problems suited to specific algorithms where quantum calculations offer advantages, that system would tap its quantum accelerator chip. Through these promised speedups, quantum computers could help advance many areas of science and technology, from longer-lasting batteries for electric cars to new medical treatments.

It’s not productive (or polite) to ask people working on quantum computing when exactly those dreamy applications will become real. The only thing for sure is that they are still many years away. Researchers have yet to make prototype quantum hardware do anything practical, although they have demonstrated prototype machines that can solve a commercially useless math puzzle faster than a state-of-the-art supercomputer.

But powerful (and, for tech companies, profit-boosting) computers powered by quantum physics have recently started to feel less hypothetical. That’s because Google, IBM, and others have decided it’s time to invest heavily in the technology. This, in turn, has helped quantum computing earn a bullet point on the corporate-strategy PowerPoint decks of big companies in areas ranging from finance, like JPMorgan, to aerospace, like Airbus.

In 2022, venture capitalists plowed a record $1.8 billion into companies working on quantum computing hardware or software worldwide, according to data from Pitchbook. That’s nearly five times the amount invested in 2019.

Like the befuddling math underpinning quantum computing, some of the expectations building around this still-impractical technology can make you lightheaded. If you squint out the window of a flight into SFO right now, you can see a haze of quantum hype drifting over Silicon Valley. But the enormous potential of quantum computing is undeniable, and the hardware needed to harness it is advancing fast.

If there were ever a perfect time to bend your brain around quantum computing, it’s now. Say “Schrödinger’s superposition” three times fast, and we can dive in.

- 1980: Physicist Paul Benioff suggests quantum mechanics could be used for computation.
- 1981: Nobel-winning physicist Richard Feynman, at Caltech, coins the term quantum computer.
- 1985: Physicist David Deutsch, at Oxford, maps out how a quantum computer would operate, a blueprint that underpins the nascent industry of today.
- 1994: Mathematician Peter Shor, at Bell Labs, writes an algorithm that could tap a quantum computer’s power to break widely used forms of encryption.
- 2004: Barbara Terhal and David DiVincenzo, two physicists working at IBM, develop theoretical proofs showing that quantum computers can solve certain math puzzles faster than classical computers.
- 2014: Google starts its new quantum hardware lab and hires the professor behind some of the best quantum computer hardware yet to lead the effort.
- 2016: IBM puts some of its prototype quantum processors on the internet for anyone to experiment with, saying programmers need to get ready to write quantum code.
- 2019: Google’s quantum computer beats a classical supercomputer at a commercially useless task based on Terhal and DiVincenzo’s 2004 proofs, in a feat many call “quantum advantage.”
- 2020: The University of New South Wales in Australia offers the first undergraduate degree in quantum engineering to train a workforce for the budding industry.

The prehistory of quantum computing begins early in the 20th century, when physicists began to sense they had lost their grip on reality. First, accepted explanations of the subatomic world turned out to be incomplete. Electrons and other particles didn’t just neatly carom around like Newtonian billiard balls, for example.

Sometimes they acted like a wave instead. Quantum mechanics emerged to explain such quirks, but introduced troubling questions of its own. To take just one brow-wrinkling example, this new math implied that physical properties of the subatomic world, like the position of an electron, existed as probabilities before they were observed.

Before you measure an electron’s location, it is neither here nor there, but some probability of everywhere. You can think of it like a quarter flipping in the air. Before it lands, the quarter is neither heads nor tails, but some probability of both.

If you find that baffling, you’re in good company. A year before winning a Nobel Prize for his contributions to quantum theory, Caltech’s Richard Feynman remarked that “nobody understands quantum mechanics.” The way we experience the world just isn’t compatible.

But some people grasped it well enough to redefine our understanding of the universe. And in the 1980s, a few of them—including Feynman—began to wonder whether quantum phenomena like subatomic particles’ probabilistic existence could be used to process information. The basic theory or blueprint for quantum computers that took shape in the ’80s and ’90s still guides Google and other companies working on the technology.

Before we belly flop into the murky shallows of quantum computing 0.101, we should refresh our understanding of regular old computers. As you know, smartwatches, iPhones, and the world’s fastest supercomputer all basically do the same thing: They perform calculations by encoding information as digital bits, aka 0s and 1s.

A computer might flip the voltage in a circuit on and off to represent 1s and 0s, for example. Quantum computers do calculations using bits, too. After all, we want them to plug into our existing data and computers.

But quantum bits, or qubits, have unique and powerful properties that allow a group of them to do much more than an equivalent number of conventional bits. Qubits can be built in various ways, but they all represent digital 0s and 1s using the quantum properties of something that can be controlled electronically. Popular examples—at least among a very select slice of humanity—include superconducting circuits, or individual atoms levitated inside electromagnetic fields.

The magic power of quantum computing is that this arrangement lets qubits do more than just flip between 0 and 1. Treat them right and they can flip into a mysterious extra mode called a superposition. You may have heard that a qubit in superposition is both 0 and 1 at the same time.

That’s not quite true and also not quite false. The qubit in superposition has some probability of being 1 or 0, but it represents neither state, just like our quarter flipping into the air is neither heads nor tails, but some probability of both. In the simplified and, dare we say, perfect world of this explainer, the important thing to know is that the math of a superposition describes the probability of discovering either a 0 or 1 when a qubit is read out.

The operation of reading a qubit’s value crashes it out of a mix of probabilities into a single clear-cut state, analogous to the quarter landing on the table with one side definitively up. A quantum computer can use a collection of qubits in superpositions to play with different possible paths through a calculation. If done correctly, the pointers to incorrect paths cancel out, leaving the correct answer when the qubits are read out as 0s and 1s.
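For readers who think better in code, here is a minimal Python sketch of that coin-flip picture. It is an illustration under simplifying assumptions, not how real quantum hardware is programmed: a single qubit is modeled as a pair of real-valued amplitudes, and the function names (`hadamard`, `probabilities`, `measure`) are made up for this example. The Hadamard operation is a standard gate that turns a qubit starting at 0 into an even superposition; applying it a second time makes the two paths leading to 1 cancel out.

```python
import math
import random

SQRT_HALF = 1 / math.sqrt(2)


def hadamard(state):
    """Apply a Hadamard gate to a one-qubit state (amp_of_0, amp_of_1)."""
    a0, a1 = state
    return (SQRT_HALF * (a0 + a1), SQRT_HALF * (a0 - a1))


def probabilities(state):
    """Chance of reading 0 and of reading 1: the squared amplitudes."""
    a0, a1 = state
    return (abs(a0) ** 2, abs(a1) ** 2)


def measure(state, rng):
    """Read the qubit: returns (bit, collapsed_state)."""
    p0, _ = probabilities(state)
    if rng.random() < p0:
        return 0, (1.0, 0.0)
    return 1, (0.0, 1.0)


zero = (1.0, 0.0)             # a qubit that definitely reads 0
superposed = hadamard(zero)   # even mix: the coin is in the air
back = hadamard(superposed)   # the two contributions to "1" cancel

if __name__ == "__main__":
    rng = random.Random(42)   # fixed seed only so reruns look the same
    print("superposed:", probabilities(superposed))
    print("after second Hadamard:", probabilities(back))
    print("ten readouts:", [measure(superposed, rng)[0] for _ in range(10)])
```

Reading out `superposed` many times gives roughly half 0s and half 1s, while `back` reads 0 every time, a toy version of wrong answers canceling.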

Qubit: A device that uses quantum mechanical effects to represent 0s and 1s of digital data, similar to the bits in a conventional computer. Qubits come in different designs. Some take the form of circuits made of superconducting material. Others are devices that control individual atoms, individual charged atoms known as ions, or single photons. Qubits don’t hold on to information perfectly because of interactions with the environment. In state-of-the-art hardware, this results in a computing error around once in every 1,000 operations. The dream algorithms of the field require an error rate of about one in a billion operations. Researchers have developed codes to correct these errors, but they require a lot of computational power to implement. In the meantime, quantum computing experts are investigating how machines without error checking might still be useful.

Superposition: It’s the trick that makes quantum computers tick, and makes qubits more powerful than ordinary bits. A superposition is a mathematical combination of both 0 and 1. Quantum algorithms can use a group of qubits in a superposition to shortcut through calculations.

Entanglement: A quantum effect so unintuitive that Einstein dubbed it “spooky action at a distance.” When two qubits in a superposition are entangled, certain operations on one have instant effects on the other, a process that helps quantum algorithms be more powerful than conventional ones.

Quantum advantage: The term refers to a quantum computer capable of performing a task, useful or not, faster than a state-of-the-art supercomputer. Also sometimes known as “quantum supremacy” or “quantum computational advantage,” the term is a moving target as researchers continue to improve classical algorithms.

For some problems that are very time-consuming for conventional computers, the trick of canceling out incorrect paths allows a quantum computer to find a solution in far fewer steps than a conventional computer would need. Grover’s algorithm, a famous quantum search algorithm, could find you in a phone book of 100 million names with just 10,000 operations.
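The 10,000 figure is the square root of 100 million, which is how the cost of Grover’s algorithm scales. The Python sketch below redoes that arithmetic and then simulates the algorithm classically on a toy phone book of 16 entries. The function name `grover_success_probability`, the toy size, and the target position are choices made for this illustration. Textbook analyses put the ideal number of rounds at about (π/4)·√N, which the round figure approximates. Tracking every amplitude like this costs a classical computer more work than a plain search would, so the sketch shows the idea, not the speedup.

```python
import math


def grover_success_probability(n_items, target, iterations):
    """Simulate Grover's search on a list of amplitudes, one per entry.

    Returns the probability of reading out `target` after the given
    number of oracle-plus-diffusion rounds.
    """
    amplitudes = [1 / math.sqrt(n_items)] * n_items  # even superposition
    for _ in range(iterations):
        # Oracle: flip the sign of the entry we are looking for.
        amplitudes[target] = -amplitudes[target]
        # Diffusion: reflect every amplitude about the average.
        mean = sum(amplitudes) / n_items
        amplitudes = [2 * mean - a for a in amplitudes]
    return amplitudes[target] ** 2


# Back-of-the-envelope counts for the 100-million-name phone book.
phone_book_size = 100_000_000
quantum_steps = math.isqrt(phone_book_size)  # square-root scaling
classical_average = phone_book_size // 2     # a plain scan checks half on average

# Toy run: 16 entries, about (pi / 4) * sqrt(16) rounds.
toy_size = 16
toy_rounds = math.floor(math.pi / 4 * math.sqrt(toy_size))
toy_probability = grover_success_probability(toy_size, target=5, iterations=toy_rounds)

if __name__ == "__main__":
    print("quantum steps (order of magnitude):", quantum_steps)
    print("classical average:", classical_average)
    print("toy rounds:", toy_rounds, "success probability:", round(toy_probability, 4))
```

In the toy run, three rounds are enough to make the right entry the overwhelmingly likely readout, compared with a 1-in-16 chance from a blind guess.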

If a classical search algorithm just spooled through all the listings to find you, it would require 50 million operations, on average. For Grover’s and some other quantum algorithms, the bigger the initial problem, or phone book, the further behind a conventional computer is left in the digital dust.

The reason we don’t have useful quantum computers today is that qubits are extremely finicky. The quantum effects they must control are very delicate, and stray heat or noise can flip 0s and 1s or wipe out a crucial superposition. Qubits have to be carefully shielded, and operated at very cold temperatures, sometimes only fractions of a degree above absolute zero.

A major area of research involves developing algorithms for a quantum computer to correct its own errors, caused by glitching qubits.
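To get a feel for why errors are such a headache, here is a back-of-the-envelope Python sketch. It assumes, simplistically, that every operation fails independently with the same probability, and it uses a classical three-copy majority vote as a loose stand-in for redundancy-based error correction. Real quantum error-correcting codes are more involved (qubits cannot simply be copied), and the function names are invented for this example. The two error rates are the ones quoted in this guide: about one in 1,000 for today’s hardware and about one in a billion for the field’s dream algorithms.

```python
def error_free_probability(error_rate, operations):
    """Chance that a run of `operations` steps has no error at all,
    assuming each step fails independently with probability `error_rate`."""
    return (1.0 - error_rate) ** operations


def majority_vote_failure(error_rate):
    """Chance that a 3-copy majority vote gives the wrong answer,
    i.e. that at least two of the three copies are flipped."""
    p = error_rate
    return 3 * p**2 * (1 - p) + p**3


todays_rate = 1e-3   # roughly one error per 1,000 operations
dream_rate = 1e-9    # roughly one error per billion operations

if __name__ == "__main__":
    for ops in (100, 1_000, 10_000):
        print(
            f"{ops:>6} operations: "
            f"today {error_free_probability(todays_rate, ops):.4f}, "
            f"dream {error_free_probability(dream_rate, ops):.6f}"
        )
    print("majority-vote failure at today's rate:", majority_vote_failure(todays_rate))
```

Under these assumptions, a 1,000-step calculation on today’s hardware finishes without a single error only about 37 percent of the time. The majority vote cuts the failure rate from 1 in 1,000 to about 3 in a million, but only by tripling the hardware, which hints at how quickly the overhead of error correction adds up.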

So far, it has been difficult to implement these algorithms because they require so much of the quantum processor’s power that little or nothing is left to crunch problems. Some researchers, most notably at Microsoft, hope to sidestep this challenge by developing a type of qubit out of clusters of electrons known as a topological qubit. Physicists predict topological qubits to be more robust to environmental noise and thus less error-prone, but so far they’ve struggled to make even one.

After announcing a hardware breakthrough in 2018, Microsoft researchers retracted their work in 2021 after other scientists uncovered experimental errors. Still, companies have demonstrated promising capability with their limited machines. In 2019, Google used a 53-qubit quantum computer to generate numbers that follow a specific mathematical pattern faster than a supercomputer could.

The demonstration kicked off a series of so-called “quantum advantage” experiments, which saw an academic group in China announcing their own demonstration in 2020 and Canadian startup Xanadu announcing theirs in 2022. (Although these were long known as “quantum supremacy” experiments, many researchers have opted to change the name to avoid echoing “white supremacy.”) Researchers have been challenging each quantum advantage claim by developing better classical algorithms that allow conventional computers to work on problems more quickly, in a race that propels both quantum and classical computing forward.

Meanwhile, researchers have successfully simulated small molecules using a few qubits. These simulations don’t yet do anything beyond the reach of classical computers, but they might if they were scaled up, potentially helping the discovery of new chemicals and materials. While none of these demonstrations directly offer commercial value yet, they have bolstered confidence and investment in quantum computing.

After having tantalized computer scientists for 30 years, practical quantum computing may not exactly be close, but it has begun to feel a lot closer. Error-prone but better than supercomputers at a cherry-picked task, quantum computers have entered their adolescence. It’s not clear how long this awkward phase will last, and like human puberty it can sometimes feel like it will go on forever.

Researchers in the field broadly describe today’s technology as Noisy Intermediate-Scale Quantum computers, putting the field in the NISQ era (if you want to be popular at parties, know that it’s pronounced “nisk”). Existing quantum computers are too small and unreliable to execute the field’s dream algorithms, such as Shor’s algorithm for factoring numbers. The question remains whether researchers can wrangle their gawky teenage NISQ machines into doing something useful.

Teams in both the public and private sector are betting so, as Google, IBM, Intel, and Microsoft have all expanded their teams working on the technology, with a growing swarm of startups such as Xanadu and QuEra in hot pursuit. The US, China, and the European Union each have new programs measured in the billions of dollars to stimulate quantum R&D. Some startups, such as Rigetti and IonQ, have even begun trading publicly on the stock market by merging with a so-called special-purpose acquisition company, or SPAC—a trick to quickly gain access to cash.

Their values have since plummeted, in some cases by much more than the pandemic correction seen more broadly across tech companies. It’s not quite clear what the first killer apps of quantum computing will be, or when they will appear. But there’s a sense that whichever company is first to make these machines useful will gain big economic and national security advantages.

Chemistry simulations may be the first practical use for these prototype machines, as researchers are figuring out how to make their qubits interact like electrons in a molecule. Computer models of molecules and atoms are vital to the hunt for new drugs or materials. Yet conventional computers can’t accurately simulate the behavior of atoms and electrons during chemical reactions.

Why? Because that behavior is driven by quantum mechanics, the full complexity of which is too great for conventional machines. Daimler and Volkswagen have both started investigating quantum computing as a way to improve battery chemistry for electric vehicles. Microsoft says other uses could include designing new catalysts to make industrial processes less energy intensive, or even pulling carbon dioxide out of the atmosphere to mitigate climate change.

Cryptography researchers have also begun preparing for quantum computers’ code-breaking capabilities. We’ve known since the ’90s that they could zip through the math underpinning the encryption that secures online banking, flirting, and shopping. Quantum processors would need to be much more advanced to do this, but governments and companies want to be ready.
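Why would fast factoring be such a big deal? The toy Python sketch below uses RSA, one widely used encryption scheme whose security rests on the difficulty of factoring, with absurdly small textbook numbers. It is an illustration only: real keys use numbers hundreds of digits long, the helper name `factor_by_trial_division` is made up here, and the brute-force loop merely stands in for the factoring step that Shor’s algorithm would speed up on a sufficiently large quantum computer.

```python
def factor_by_trial_division(n):
    """Find two factors of n by brute force (hopeless for real key sizes)."""
    candidate = 2
    while candidate * candidate <= n:
        if n % candidate == 0:
            return candidate, n // candidate
        candidate += 1
    raise ValueError("n has no nontrivial factors")


# Key setup with tiny primes. Only n and e are made public.
p, q = 61, 53
n = p * q          # public modulus
e = 17             # public exponent

# Anyone can encrypt with the public key.
message = 65
ciphertext = pow(message, e, n)

# An eavesdropper who can factor n can rebuild the private key.
found_p, found_q = factor_by_trial_division(n)
phi = (found_p - 1) * (found_q - 1)
recovered_d = pow(e, -1, phi)   # modular inverse; needs Python 3.8+
recovered_message = pow(ciphertext, recovered_d, n)

if __name__ == "__main__":
    print("factors found:", found_p, found_q)
    print("recovered private exponent:", recovered_d)
    print("recovered message:", recovered_message)
```

With a four-digit modulus the loop finishes instantly. With real key sizes the same brute-force approach would take longer than anyone can wait, and that gap is what a working, large-scale implementation of Shor’s algorithm would close.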

The US National Institute of Standards and Technology is in the process of evaluating new encryption systems that could be rolled out to quantum-proof the internet. Tech companies like Google are also betting that quantum computers can make artificial intelligence more powerful. That’s further in the future and less well mapped out than chemistry or code-breaking applications, but researchers argue they can figure out the details down the line as they play around with larger quantum processors.

One hope is that quantum computers could help machine-learning algorithms pick up complex tasks using fewer than the millions of examples typically used to train AI systems today. Despite all the superposition-like uncertainty about when the quantum computing era will really begin, Big Tech companies argue that programmers need to get ready now. Google, IBM, and Microsoft have all released open source tools to help coders familiarize themselves with writing programs for quantum hardware.

IBM offers online access to some of its quantum processors, so anyone can experiment with them. Long term, the big computing companies see themselves making money by charging corporations to access data centers packed with supercooled quantum processors. Amazon Web Services offers a service, launched in 2019, that connects users over the cloud to startup-built quantum computers made of various qubit types.

Governments and universities are also working to train a quantum-literate workforce. In 2020, the US government launched an initiative to develop a K-12 curriculum relating to quantum computing (it’s called Q-12, geddit?). That same year, the University of New South Wales in Australia offered the world’s first bachelor’s degree in quantum engineering.

What’s in it for the rest of us? Despite some definite drawbacks, the age of conventional computers has helped make life safer, richer, and more convenient—many of us are never more than five seconds away from a kitten video. The era of quantum computers should have similarly broad-reaching, beneficial, and impossible-to-predict consequences. Bring on the qubits.

This guide was last updated on February 22, 2023.

From: wired

URL: https://www.wired.com/story/wired-guide-to-quantum-computing/