Teenagers deserve to grow, develop, and experiment, says Caitriona Fitzgerald, deputy director at the Electronic Privacy Information Center (EPIC), a nonprofit advocacy group. They should be able to test or abandon ideas “while being free from the chilling effects of being watched or having information from their youth used against them later when they apply to college or apply for a job.” She called for the Federal Trade Commission (FTC) to make rules to protect the digital privacy of teens.

Hye Jung Han, the author of a Human Rights Watch report about education companies selling personal information to data brokers, wants a ban on personal data-fueled advertising to children. “Commercial interests and surveillance should never override a child’s best interests or their fundamental rights, because children are priceless, not products,” she said. Han and Fitzgerald were among about 80 people who spoke at the first public forum run by the FTC to discuss whether it should adopt new rules to regulate personal data collection, and the AI fueled by that data.

The FTC is seeking the public’s help to answer questions about how to regulate commercial surveillance and AI. Among those questions is whether to extend the definition of discrimination beyond traditional measures like race, gender, or disability to include teenagers, rural communities, homeless people, or people who speak English as a second language. The FTC is also considering whether to ban or limit certain practices, restrict the period of time companies can retain consumer data, or adopt measures previously proposed by congressional lawmakers, like audits of automated decision-making systems to verify accuracy, reliability, and error rates.

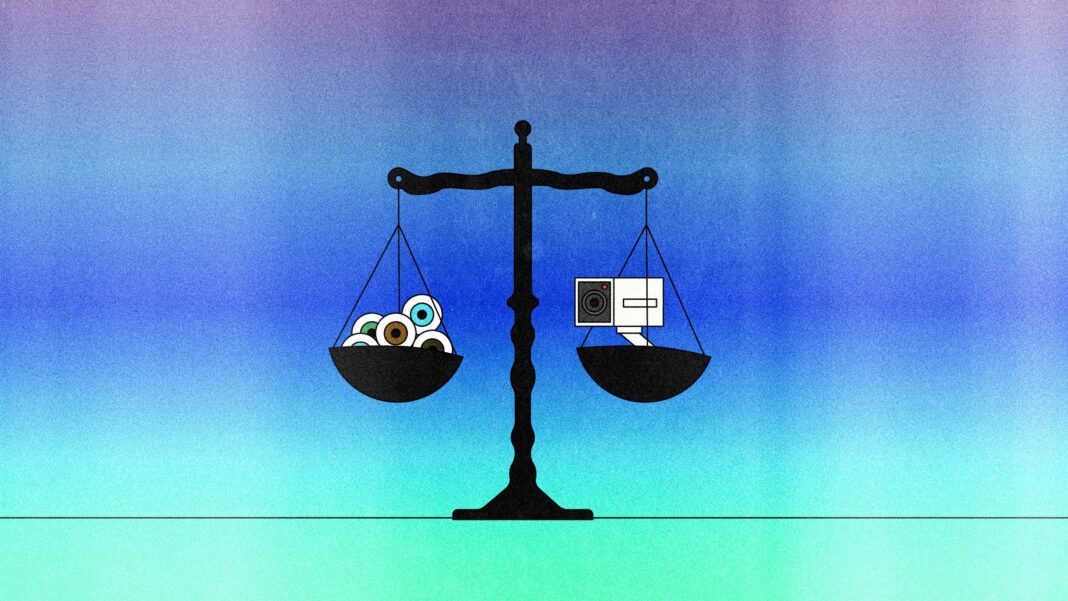

Tracking people’s activity on the web is the foundation of the online economy, dating back to the introduction of cookies in the 1990s. Data brokers from obscure companies collect intimate details about people’s online activity and can make predictions about individuals, like their menstrual cycles, or how often they pray, as well as collecting biometric data like facial scans. Cookies underpin online advertising and the business models of major companies like Facebook and Google, but today it’s common knowledge that data brokerages can do far more than advertise goods and services.

Online tracking can bolster attempts to commit fraud, trick people into buying products or disclosing personal information, and even share location data with law enforcement agencies or foreign governments. As an FTC document that proposes new rules puts it, that business model is “creating new forms and mechanisms of discrimination.” Last month, the Federal Trade Commission voted 3-2 along party lines in favor of adopting an Advance Notice of Proposed Rulemaking (ANPR) to consider drafting new rules to address unfair or deceptive forms of data collection or AI.

What’s unclear is where the commission will draw the line. The FTC is taking some data brokers to court, most recently Kochava, a company that sells location data from places like abortion clinics and domestic violence survivor shelters and that is itself suing the FTC, but those punishments come on a case-by-case basis. New rules can address systemic problems and tell businesses the kind of conduct that can lead to fines or land them in court.

The beginning of the rulemaking process marks the first major step to regulate AI by the commission since hiring staff dedicated to artificial intelligence a year ago. Any attempt to create new rules would require proving that an unfair or deceptive business practice is prevalent and meets a legal threshold for the definition of unfair. The FTC will accept public comment about the commercial surveillance and AI ANPR until October 21.

Should the commission decide new rules are necessary, it will release a notice of proposed rulemaking and again allow for a public comment period before making those rules final. FTC chair Lina Khan said new rules could help the commission impose fines on first-time violators; that the “middlemen of surveillance capitalism” are exacerbating imbalances of power in the US; and that, as Neil Richards put it, this “probably represents the most highly surveilled environment in the history of humanity.” While the FTC considers whether new rules are necessary, the federal agency already has a fair amount of authority to protect consumers and to police unfair or deceptive business practices.

A paper published earlier this year in the University of Pennsylvania Law Review points to five ways the FTC is already empowered to take actions that go beyond the limits of federal anti-discrimination law. For example, while existing discrimination law may target the ultimate decider, like employers or lenders, the FTC can go after the sellers of software for aiding and abetting discrimination. Coauthor Andrew Selbst, an assistant professor at the University of California, Los Angeles School of Law, has also considered questions of liability in the age of AI and how to assess the impact of algorithms.

Selbst said that the ANPR is unprecedented in scope, but if you wanted an investigation to understand the scale of the problem before you attack, “this is exactly what it would look like.” Despite the many questions in the ANPR, the need for a rule to end the sales of data to third parties received support from privacy and industry experts, as well as members of the public. Numerous opinions sent to the FTC and shared in the public forum called for comprehensive data privacy protections.

A set of tech accountability principles recently released by the White House also called data privacy protections essential to holding tech companies accountable. Karen Kornbluh leads the Digital Innovation and Democracy Initiative at the German Marshall Fund. She wants the FTC to adopt rules that allow young people to reset an algorithm or eliminate personal data that informs recommendations or predictions, so that, for example, a young girl who develops an eating disorder doesn’t continue to see videos about weight loss.

Kornbluh also believes that protecting the personal data of active US military service members may be important to keep foreign adversaries from exploiting a “national security loophole.” Regardless of party affiliation, all five commissioners hope that the comprehensive data privacy legislation gaining bipartisan support in Congress becomes law, but that’s where agreement seems to end. Commissioner Phillips, who voted against beginning the ANPR process, expressed concern that the rulemaking effort will be used as an excuse to derail privacy legislation.

Commissioner Wilson, who also voted against the ANPR, expressed similar concern and suggested that new rules may not stand up to judicial review. On September 8, Commissioner Rebecca Slaughter said that until Congress passes a law, the FTC must do everything in its power to investigate and address unlawful behavior, and that the beginning of the effort to consider new rules should be seen as a “bookend of a long era of not appropriately exercising our rulemaking authorities.” Slaughter first called for a rulemaking process three years ago after arriving at the conclusion that a case-by-case approach was insufficient to curb unfair practices.

She argues that the FTC won’t “clip the wings of congressional ambition” and that the worst outcome would be if Congress failed to pass comprehensive privacy legislation and the FTC failed to act.

From: wired

URL: https://www.wired.com/story/ftc-ai-regulation/