5 Moral Dilemmas That Self-Driving Cars Face Today

Naveen Joshi, Former Contributor. Opinions expressed by Forbes Contributors are their own.

Aug 5, 2022, 07:30am EDT

Suppose you are driving within the speed limit.

Out of nowhere, you see a child running across the road. You quickly work out that even if you braked as hard as you could, you’d still hit the child. The only way to save the child is to steer your vehicle either left or right.

However, there are a dozen bystanders on either side of the road, and you’d definitely end up ramming into them. So, what would you do in such a situation? Would you hit the child or the dozen bystanders? Or, if possible, would you disregard your own safety to avoid harming either the child or the innocent people by the side of the road? This dilemma is already difficult for humans to answer in a morally correct manner.

But what if autonomous or self-driving cars face such a moral dilemma? You have got to wonder, “What are the ethics of self-driving cars in such cases?” What will they do? Will they spare the child and the bystanders and risk the driver’s life?

Putting the Ethics of Self-Driving Cars to the Test

In 2020, there were 35,766 fatal motor vehicle crashes in the United States, which led to the deaths of 38,824 people. Human error is cited as the leading cause of motor vehicle accidents.

Using autonomous vehicles can help prevent many of these accidents. However, one might argue that studies have shown that accidents do happen even with autonomous vehicles. And, according to one study, 99% of autonomous vehicle accidents are caused by human error.

But if you look at the report in depth, you’ll find that those accidents were caused by a human in another vehicle or by a pedestrian. There have been two cases where the self-driving car’s system was at fault for the accident. So, it can be concluded that self-driving cars are more reliable than their manual counterparts.

That being said, self-driving cars still face certain dilemmas today, which raises questions about their ethics and makes us wonder whether we really are ready for a driverless future. Let’s take a look.

Five Moral Dilemmas Self-Driving Cars Face Today

The moral dilemmas of self-driving cars are not new.

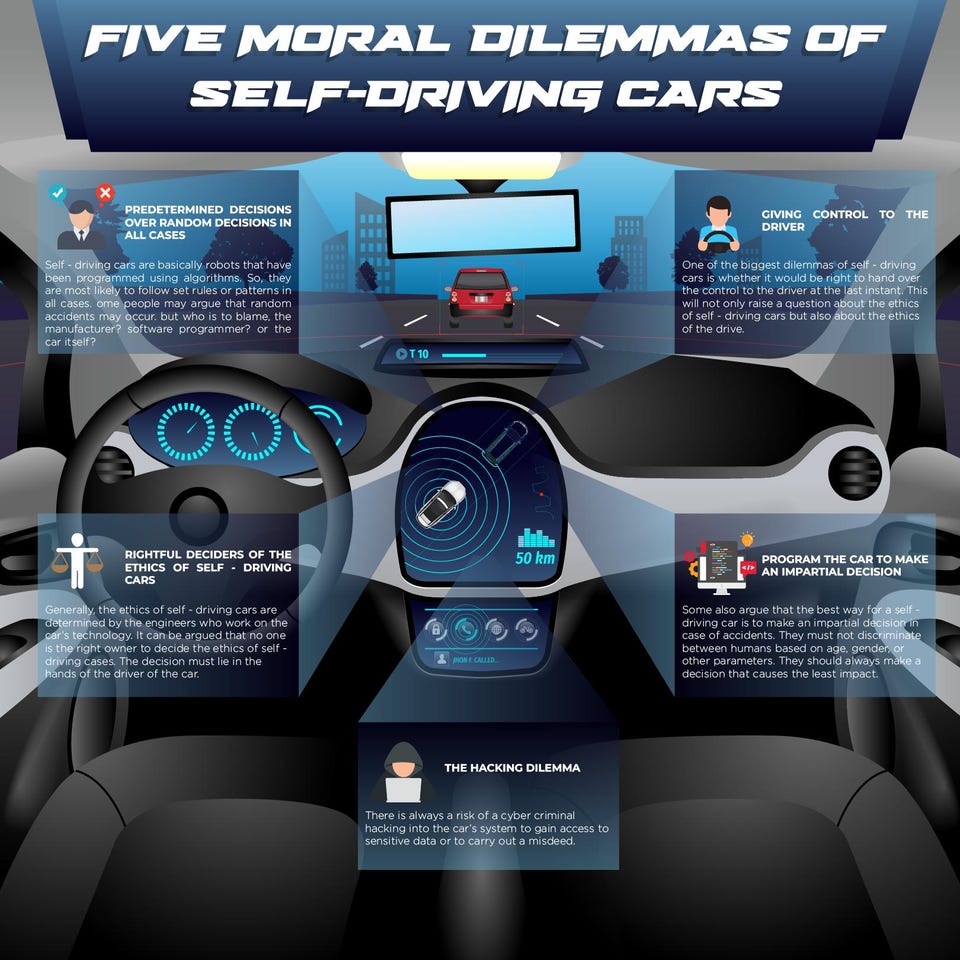

Since the inception of self-driving cars, their ethics have been a hot topic of debate. Here is a look at five moral dilemmas self-driving cars still face today.

Predetermined Decisions Over Random Decisions in All Cases

Self-driving cars are basically robots that have been programmed using algorithms.

So, they are most likely to follow set rules or patterns in all cases. However, that may not be the best course of action in all situations. Some people may argue, and rightly so to some degree, that random accidents caused by humans are more justifiable than the predetermined death of a human or animal caused by an autonomous car.

So, in such cases, who is responsible for the death(s) caused? Is it the self-driving car manufacturer? Is it the software programmer? Or is it the car itself? There is no clear and right answer to this question. Thus, many people feel that accidents should happen naturally rather than have a robot or software decide who lives and who dies.

Giving Control to the Driver

Tesla requires the driver to keep their hands on the steering wheel and be attentive, even when the vehicle is running in fully autonomous mode.

The driver needs to be prepared to take over at any moment. But, even in such cases, if an accident does happen, who is responsible for the loss of life and property? Is it the autonomous car that led to the accident? Or is it the driver who couldn’t make the appropriate decision in the nick of time? Or is it the reckless pedestrian or the driver of another car? Thus, one of the biggest dilemmas of self-driving cars is whether it would be right to hand over control to the driver at the last instant. This raises a question not only about the ethics of self-driving cars but also about the ethics of the driver.

Rightful Deciders of the Ethics of Self-Driving Cars

Generally, the ethics of self-driving cars are determined by the engineers who work on the car’s technology. What they deem right or wrong determines how the car will act in situations such as accidents. But people argue about who is the right person or organization to decide the ethics of self-driving cars.

Is it the engineers who worked on the car’s technology? Is it the government of the country where the vehicle will be driven? It can be argued that none of them is the right party to decide the ethics of self-driving cars. The decision must lie in the hands of the driver of the car.

Program the Car to Make an Impartial Decision

Some also argue that the best approach is for a self-driving car to make an impartial decision in case of an accident.

Self-driving cars must not discriminate between humans based on age, gender, or other parameters. They should always make the decision that causes the least harm. For example, if the car has to choose between saving a child and saving a group of sexagenarians, it should save the sexagenarians, as that saves more lives.

Moreover, human lives should be given priority over animal lives. Germany has become the first country to adopt such rules regarding self-driving cars. It has prioritized human lives above all other factors.
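To make the rule above concrete, here is a minimal Python sketch of an impartial, least-harm choice. It is only an illustration under simplifying assumptions, not how any real vehicle or the German rules are implemented: the `Outcome` record, the `choose_outcome` function, and the maneuver names are all hypothetical, and the sketch assumes the car can count exactly how many humans and animals each maneuver would harm.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """One possible maneuver and the harm it would cause (hypothetical model)."""
    name: str
    humans_harmed: int
    animals_harmed: int


def choose_outcome(outcomes):
    """Return the outcome that harms the fewest humans.

    Ties on humans are broken by the fewest animals harmed, so human lives
    always take priority. Age, gender, and similar attributes are not part
    of the Outcome record, so they cannot influence the choice.
    """
    if not outcomes:
        raise ValueError("at least one outcome is required")
    # Tuples compare element by element: humans first, animals second.
    return min(outcomes, key=lambda o: (o.humans_harmed, o.animals_harmed))


# Illustrative numbers only (assumed, not taken from any real incident).
options = [
    Outcome("brake_straight", humans_harmed=1, animals_harmed=0),
    Outcome("swerve_left", humans_harmed=3, animals_harmed=0),
    Outcome("swerve_right", humans_harmed=0, animals_harmed=1),
]

best = choose_outcome(options)
print(best.name)
```

With these assumed numbers, the sketch picks `swerve_right`, because it is the only maneuver that harms no humans. Note that the sketch only encodes the rule; it does nothing to settle who is responsible for the outcome.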

The Hacking Dilemma

There is always a risk of a cybercriminal hacking into the car’s system to gain access to sensitive data or to carry out a misdeed. For example, what if the autonomous car is hacked by a cybercriminal and commanded to cause an accident in order to implicate the driver? In such cases, who is responsible for the accident and the loss of lives? Is it the cybercriminal? Is it the driver? Is it the car manufacturer who couldn’t secure the car against such attacks? Due to such increased risks and unclear answers, self-driving cars, as a whole, may seem unethical to society.

5 Moral Dilemmas That Self Driving Cars Face Today (Allerin)

Conclusion

The ethics of self-driving cars has been constantly under debate.

The ethics have been defended and attacked to the extreme by supporters at each end of the spectrum. There is still no clear answer on how to solve the moral dilemmas self-driving cars face. As the debate around the topic intensifies due to the increasing adoption of self-driving cars, we hope that strict laws and regulations will be developed that can finally answer these questions in a correct, justifiable manner.


From: forbes

URL: https://www.forbes.com/sites/naveenjoshi/2022/08/05/5-moral-dilemmas-that-self-driving-cars-face-today/