Artificial Intelligence Regulation, Why Experts Are Calling For Slowing Down AI: AI Series 4 Of 5

Nicole Serena Silver, Contributor. Opinions expressed by Forbes Contributors are their own.

Jun 6, 2023, 11:51pm EDT

“We do not know how to train systems to robustly behave well. So far, no one knows how to train very powerful AI systems to be robustly helpful, honest, and harmless… The results of this could be catastrophic, either because AI systems strategically pursue dangerous goals, or because these systems make more innocent mistakes in high-stakes situations,” Anthropic stated in a blog post.

Unchecked AI development could lead to negative consequences, such as massive job displacement, exacerbation of inequality, loss of personal privacy, and weaponization.

Dan Hendrycks, Executive & Research Director of the Center for AI Safety, outlines three larger potential threats to humanity that experts are concerned about:

Weaponization – AIs could be used in military technology, making conflict both more likely to happen and more deadly when it does. For example, if AIs are made responsible for high-stakes military decisions before we have mastered how AI systems work, they could cause extremely deadly conflicts.

Selfishness and Evolutionary Forces – Selection pressures on AI development could lead to dangerous AIs with catastrophic consequences. AI systems that are agentic, power-seeking, and selfish will have advantages over AI systems that lack these traits. Unchecked competitive pressures could lead to the development and deployment of these dangerous systems, as companies will fear being outcompeted unless they do the same.

Misalignment – A final risk involves our inability to control these systems. If we develop smarter-than-human systems without methods to robustly align them with the right objectives, this could pose an existential risk. Such systems might be relatively apathetic toward humanity and make the environment uninhabitable for humans (just as humans do to less powerful species).

Experts disagree about which of these scenarios is most likely.

There is more consensus about the sources of risk, since many of them can increase multiple kinds of danger. Other reasons for regulation include:

Jobs – The rapid automation of jobs across various sectors could lead to widespread unemployment, as machines outperform humans in both manual and cognitive tasks. The gap between high-skilled and low-skilled workers would widen, further polarizing the job market and exacerbating inequality. A well-thought-out plan of adaptation and upskilling is essential to lessen job displacement.

Education – A failure to adapt education systems to the demands of the AI-driven workforce could result in a large segment of the population being left behind, with limited opportunities for gainful employment.

Economy – Concentrated wealth and power among a few AI-driven corporations could lead to an unequal distribution of resources, further deepening social and economic divides.

Amplified Social Inequality – If AI systems are trained on biased data or reflect the values and biases of their creators, they can reproduce and exacerbate societal inequities. For example, AI-powered hiring systems that are trained on biased data may disproportionately disadvantage women or minority candidates. Regulation can help to ensure that AI systems are developed and implemented in ways that promote fairness and equity.

Cyber Attacks – AI systems can be used for malicious purposes, such as spreading disinformation or carrying out cyber attacks. Additionally, as AI systems become more advanced, there is a risk that they could be used to create autonomous weapons or other tools that could cause harm to individuals or society. Regulation can help to mitigate these risks and ensure that AI is developed and used in ways that promote human welfare and safety.

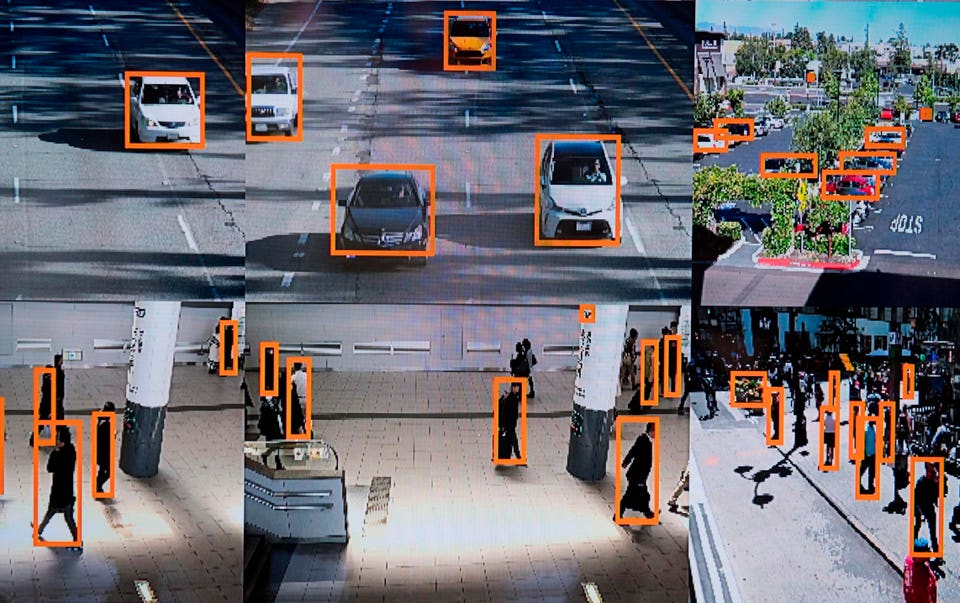

Intellectual Property – Intellectual-property laws have not caught up with the technological progress made in AI research. Part of how AI works is that it cannibalizes other people’s ideas.

Privacy – As AI-driven technologies collect and analyze vast amounts of data, there is a risk that personal information could be misused or compromised, leading to potential harm to individuals or organizations. Data breaches have occurred due to security vulnerabilities in AI systems, highlighting the importance of robust cybersecurity measures in AI development and implementation.

Currently, AI is hitting the market with incredible speed and very little governance, especially at startups that don’t have investor oversight. For example, the Lensa image-editing app exploded onto the market, with people creating avatars of themselves based on selfies they uploaded.

Lensa’s generated images showed racial biases and included nude images, which were produced for women only and generated from headshots. Concerns were also raised over the legal right to merge images of artists’ original creations to create AI avatars. The legality of it all is blurred, and this is an area where regulations have not yet caught up.

There is potential harm to individuals (especially if someone else uses an individual’s headshots to create nudes of them), there are potential copyright issues, and there is the potential for legal and reputational damage to the company. These are the kinds of issues that need to be addressed to protect everyone, from individual users to the company itself. The ethical and responsible use of AI is a hot topic, and I imagine it will continue to be.

As AI becomes more advanced and ubiquitous, there are increasing calls for oversight and governance of its development and use. This raises important questions about who should be responsible for overseeing AI, what regulations should be put in place, and how to balance innovation with the potential risks and ethical considerations of AI. One of the challenges with governing AI is that it is a rapidly evolving technology, making it difficult for regulatory bodies to keep up.

Additionally, AI is being developed and used by a wide range of organizations, from small startups to large tech companies, creating a complex ecosystem of stakeholders. We are all a part of laying the foundation for how AI enters society. One of the better things you can do is educate yourself on the benefits and downsides.

If you have concerns, or want to embrace AI in specific ways, send brief messages to public officials, companies, and company boards to share your thoughts.

“You are not a drop in the ocean, you are the ocean in a drop.” – Rumi

Your voice matters.

To learn more about the benefits, downsides, and disruption of AI, follow this article series, which will include:

1. AI History And Future
2. AI’s Influence On Jobs And The Workforce
3. The Future Of Education – Disruption Caused By AI And ChatGPT
4. AI Regulation, Why Experts Are Calling For Slowing Down Artificial Intelligence
5. The Future Of AI: Dystopian And Utopian Projections

From: forbes

URL: https://www.forbes.com/sites/nicolesilver/2023/06/06/artificial-intelligence-regulation-why-experts-are-calling-for-slowing-down-ai-ai-series-4-of-5/