AMD MI300

In the fiercely competitive high-performance computing (HPC) and artificial intelligence (AI) sectors, AMD made a substantial leap forward with the release of its new MI300X and MI300A accelerators. The new accelerators show AMD's aggressive pursuit of a larger position in the generative AI market and present the first significant threat to NVIDIA's dominance.

AMD's MI300X

Based on AMD's advanced CDNA 3 architecture, the new MI300X marks a significant departure from its predecessors. It delivers the enhanced performance and efficiency needed for complex AI and HPC workloads.

The MI300X's architecture supports a variety of data formats and is especially adept at handling sparse matrices, which are crucial for AI workloads. This optimizes performance for the machine learning tasks that are increasingly prevalent across industries. The MI300X also sets itself apart with 192 GB of High Bandwidth Memory (HBM) and a peak bandwidth of 5.3 TB/s.

This memory is not just about capacity; it is essential for managing the large, intricate datasets typical of AI applications, particularly when training large language models (LLMs). The ability to process and analyze these datasets quickly is critical for advances in AI, and the MI300X is poised to deliver on this front. The MI300X also shows significant strengths in inference benchmarks, where it uses its larger memory capacity to achieve higher throughput in scenarios such as the Bloom benchmark.
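To see why capacity and bandwidth matter for LLM inference, it helps to do the arithmetic. The sketch below is a back-of-envelope estimate, not a benchmark. It assumes weights stored at 2 bytes per parameter (FP16/BF16) and ignores the KV cache, activations and runtime overhead. It also assumes a decode step that is purely memory-bandwidth bound, so every weight is read once per generated token. The function names are hypothetical. Only the 192 GB and 5.3 TB/s figures come from the article.

```python
# Back-of-envelope sizing for LLM inference on a single accelerator.
# Assumptions (illustrative, not vendor data): weights stored in FP16/BF16
# (2 bytes per parameter); KV cache, activations and overhead are ignored.

HBM_CAPACITY_GB = 192      # MI300X HBM capacity quoted in the article
PEAK_BANDWIDTH_TBPS = 5.3  # MI300X peak memory bandwidth quoted in the article


def weight_footprint_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Memory needed just to hold the model weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def min_ms_per_token(weights_gb: float, bandwidth_tbps: float) -> float:
    """Hypothetical lower bound on per-token decode latency, assuming every
    weight is streamed from HBM once per token at peak bandwidth.
    GB / (TB/s) = 1e9 / 1e12 s = milliseconds."""
    return weights_gb / bandwidth_tbps


if __name__ == "__main__":
    params = 70e9  # a 70B-parameter model, e.g. Llama 2 70B
    weights = weight_footprint_gb(params)
    fits = weights <= HBM_CAPACITY_GB
    print(f"FP16 weights: {weights:.0f} GB, fits in {HBM_CAPACITY_GB} GB: {fits}")
    floor = min_ms_per_token(weights, PEAK_BANDWIDTH_TBPS)
    print(f"Bandwidth-bound floor: {floor:.1f} ms/token")
```

Under these assumptions, a 70B model's weights come to about 140 GB, which fits on one 192 GB device. The bandwidth-bound floor works out to roughly 26 ms per token. Real latency depends on batch size, KV-cache size, kernels and parallelism, so treat this as a lower bound rather than a prediction.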

It also exhibits a 40% latency advantage in the LLAMA 2-70B inference benchmark over the H100, which is attributed to its superior bandwidth. MI300X Performance While there are clear areas where the MI300x’s training performance can be improved—currently achieving less than 30% of the theoretical FLOPS—there is optimism within the industry. With the anticipated software optimizations and increasing support from software frameworks such as OpenAI Triton and PyTorch 2.

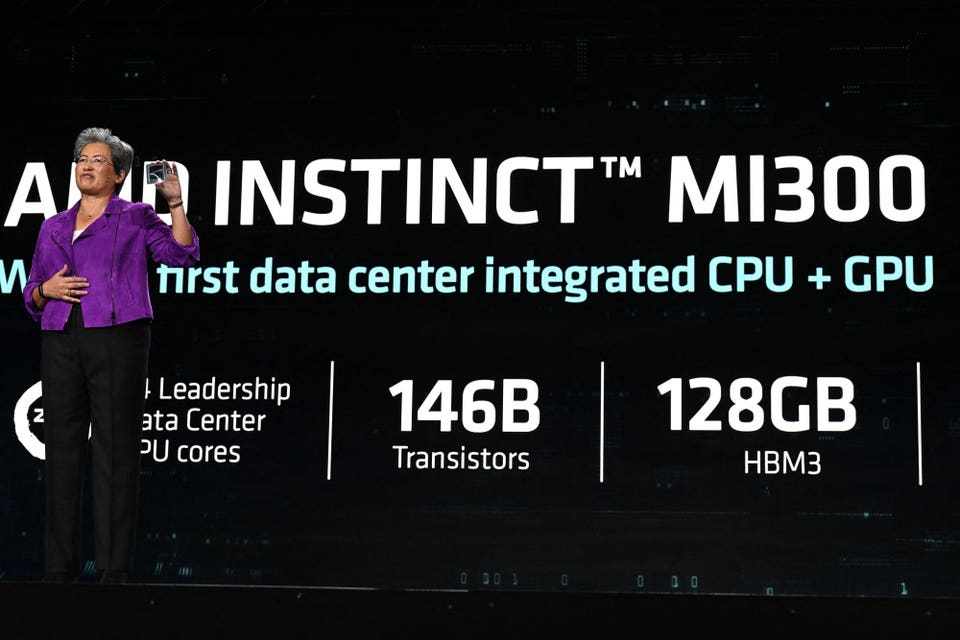

0, the MI300X is expected to become increasingly competitive. These enhancements, coupled with AMD’s strategic partnerships and robust design, suggest that the MI300X is not just a product of today but a building block for the future of AI and HPC technology. AMD’s MI300A While the MI300X is a stand-alone accelerator, the AMD Instinct MI300A is an accelerated processing unit (APU), bridging the gap between high-performance CPUs and GPUs within a single package.

This hybrid APU is part of AMD's "Antares" Instinct MI300 family, designed for HPC and AI applications. By integrating AMD's "Zen 4" CPU cores with CDNA 3 GPU cores, the MI300A achieves a level of data-processing efficiency that sets a new benchmark in the industry. The CPU and GPU directly access a shared pool of 128 GB of HBM3 memory. This reduces data-transfer times and increases overall computational throughput, a critical advantage for complex workloads in scientific research, machine learning, and data analytics.

The MI300A's 128 GB of HBM3 provides a substantial bandwidth of 5.3 TB/s to handle the intricate datasets now standard in HPC and AI operations. This combination of bandwidth and capacity is pivotal for efficiently processing the vast datasets involved in training AI models.

The MI300A also tells an impressive efficiency story. Integrating CPU and GPU cores in a single package improves performance and significantly reduces power draw. This is in line with AMD's 30×25 initiative, which aims for a 30x improvement in energy efficiency by 2025.

Analyst's Take

AMD is mounting its most significant challenge yet to NVIDIA's dominance in the generative AI space.

With cloud providers and enterprises increasingly eager to diversify their technology portfolios, AMD's new offerings are well positioned to disrupt current market dynamics. The market response to AMD's announcement has been telling. Adoption of the new offerings by major cloud providers such as Microsoft and Oracle, along with enterprises such as Databricks (MosaicML), points to a broader industry trend of seeking competitive alternatives to NVIDIA's solutions.

AMD's approach to design and performance, coupled with potentially more competitive pricing, could lead the large AI market to reconsider its hardware allegiances. The strategic alliances forming as part of AMD's broader market strategy are also worth noting. The collaboration with Broadcom to support Infinity Fabric on Broadcom's PCIe switches is particularly significant.

This alliance directly challenges NVIDIA and sets the stage for a more diverse and competitive high-performance networking landscape. While AMD's MI300X and MI300A are technically impressive, the true test will be their deployment and the real-world gains they deliver to end users. As they stand, these accelerators are a testament to AMD's innovative spirit and its commitment to pushing the boundaries of what is possible in AI and HPC.

The industry will be watching closely to see if these products can fulfill AMD’s promise and help reshape the future of computing. Whether these advances will translate into a significant market share gain for AMD remains to be seen, but the potential for disruption is unmistakable. With the groundwork laid for a substantial shift, AMD’s next moves will be crucial in determining the future dynamics of the AI hardware space.


From: forbes

URL: https://www.forbes.com/sites/stevemcdowell/2023/12/10/amd-disrupts-ai-accelerator-market-with-new-mi300-offerings/