In the near future, an AI assistant will make itself at home inside your ears, whispering guidance as you go about your daily routine.

It will be an active participant in all aspects of your life, providing useful information as you browse the aisles in crowded stores, take your kids to see the pediatrician — even when you grab a quick snack from a cupboard in the privacy of your own home. It will mediate all of your experiences, including your social interactions with friends, relatives, coworkers and strangers. Of course, the word “mediate” is a euphemism for allowing an AI to influence what you do, say, think and feel.

Many people will find this notion creepy, and yet as a society we will accept this technology into our lives, allowing ourselves to be continuously coached by friendly voices that inform us and guide us with such skill that we will soon wonder how we ever lived without the real-time assistance. When I use the phrase “AI assistant,” most people think of old-school tools like Siri or Alexa that allow you to make simple requests through verbal commands. This is not the right mental model.

That’s because next-generation assistants will include a new ingredient that changes everything: context awareness. This additional capability will allow these systems to respond not just to what you say, but to the sights and sounds that you are currently experiencing all around you, captured by cameras and microphones on AI-powered devices that you will wear on your body.

Whether you’re looking forward to it or not, context-aware AI assistants will hit society in 2024, and they will significantly change our world within just a few years, unleashing a flood of powerful capabilities along with a torrent of new risks to personal privacy and human agency. On the positive side, these assistants will provide valuable information everywhere you go, precisely coordinated with whatever you’re doing, saying or looking at. The guidance will be delivered so smoothly and naturally, it will feel like a superpower — a voice in your head that knows everything, from the specifications of products in a store window, to the names of plants you pass on a hike, to the best dish you can make with the scattered ingredients in your refrigerator.

On the negative side, this ever-present voice could be highly persuasive, even manipulative, as it assists you through your daily activities, especially if corporations use these trusted assistants to deploy targeted conversational advertising. The risk of AI manipulation can be mitigated, but doing so requires policymakers to focus on this critical issue, which thus far has been largely ignored. Of course, regulators have not had much time: the technology that makes context-aware assistants viable for mainstream use has been available for less than a year.

That technology is the multi-modal large language model, a new class of LLM that can accept as input not just text prompts, but also images, audio and video. This is a major advancement, because multi-modal models have suddenly given AI systems their own eyes and ears, and they will use these sensory organs to assess the world around us as they give guidance in real time. The first mainstream multi-modal model was GPT-4, which was released by OpenAI in March 2023.

The most recent major entry into this space is Google’s Gemini LLM, announced just a few weeks ago. The most interesting entry (to me personally) is Meta’s multi-modal LLM called AnyMAL, which also takes in motion cues. This model goes beyond eyes and ears, adding a vestibular sense of movement.

This could be used to create an AI assistant that doesn’t just see and hear everything you experience; it even considers your physical state of motion. With this AI technology now available for consumer use, companies are rushing to build these models into systems that can guide you through your daily interactions. This means putting a camera, microphone and motion sensors on your body in a way that can feed the AI model and allow it to provide context-aware assistance throughout your life.

The most natural place to put these sensors is in glasses, because that ensures cameras are looking in the direction of a person’s gaze. Stereo microphones on eyewear (or earbuds) can also capture the soundscape with spatial fidelity, allowing the AI to know the direction that sounds are coming from, like barking dogs, honking cars and crying kids. In my opinion, the company currently leading the way toward products in this space is Meta.

Two months ago, Meta began selling a new version of its Ray-Ban smart glasses that was configured to support advanced AI models. The big question I’ve been tracking is when the company would roll out the software needed to provide context-aware AI assistance. That’s no longer an unknown: on December 12, Meta began providing early access to the AI features, which include remarkable capabilities.

In the release video, Mark Zuckerberg asked the AI assistant to suggest a pair of pants that would match a shirt he was looking at. It replied with skilled suggestions. Similar guidance could be provided while cooking, shopping, traveling — and of course socializing.

And the assistance will be context-aware: for example, reminding you to buy dog food when you walk past a pet store. Another high-profile company that has entered this space is Humane, which developed a wearable pin with cameras and microphones.

Its device starts shipping in early 2024 and will likely capture the imagination of hardcore tech enthusiasts. That said, I personally believe that sensors worn on glasses are more effective than sensors worn elsewhere on the body, because they detect the direction a user is looking, and they can also add visual elements to the user’s line of sight. These elements are simple overlays today, but over the next five years they will become rich and immersive mixed reality experiences.

Regardless of whether these context-aware AI assistants are enabled by sensored glasses, earbuds or pins, they will become widely adopted in the next few years. That’s because they will offer powerful features from real-time translation of foreign languages to historical content. But most significantly, these devices will provide real-time assistance during social interactions, reminding us of the names of coworkers we meet on the street, suggesting funny things to say during lulls in conversations, or even warning us when the person we’re talking to is getting annoyed or bored based on subtle facial or vocal cues (down to micro-expressions that are not perceptible to humans but easily detectable by AI).

Yes, whispering AI assistants will make everyone seem more charming, more intelligent, more socially aware and potentially more persuasive as they coach us in real time. And it will become an arms race, with assistants working to give us an edge while protecting us from the persuasion of others. As a lifelong researcher into the impacts of AI and mixed reality, I’ve been worried about this danger for decades.

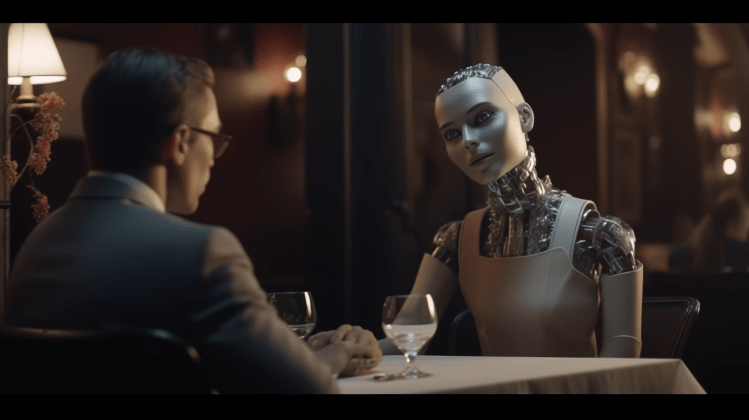

To raise awareness, a few years ago I published a short story entitled Carbon Dating about a fictional AI that whispers advice in people’s ears. In the story, an elderly couple has their first date, neither saying anything that’s not coached by AI. It might as well be the courting ritual of two digital assistants, not two humans, and yet this ironic scenario may soon become commonplace.

To help the public and policymakers appreciate the risks, Carbon Dating was recently turned into Metaverse 2030 by the UK’s Office of Data Protection Authority (ODPA). Of course, the biggest risks are not AI assistants butting in when we chat with friends, family and romantic interests. The biggest risks are how corporate or government entities could inject their own agenda, enabling powerful forms of conversational influence that target us with customized content generated by AI to maximize its impact on each individual.

To educate the public about these manipulative risks, the Responsible Metaverse Alliance recently released Privacy Lost. For many people, the idea of allowing AI assistants to whisper in their ears is a creepy scenario they intend to avoid. The problem is, once a significant percentage of users are being coached by powerful AI tools, those of us who reject the features will be at a disadvantage.

In fact, AI coaching will likely become part of the basic social norms of society, with everyone you meet expecting that you’re being fed information about them in real time as you hold a conversation. It could become rude to ask someone what they do for a living or where they grew up, because that information will simply appear in your glasses or be whispered in your ears. And when you say something clever or insightful, nobody will know if you came up with it yourself or if you’re just parroting the AI assistant in your head.

The fact is, we are headed towards a new social order in which we’re not just influenced by AI, but effectively augmented in our mental and social capabilities by AI tools provided by corporations. I call this technology trend “augmented mentality,” and while I believe it’s inevitable, I thought we had more time before we would have AI products fully capable of guiding our daily thoughts and behaviors. But with recent advancements like context-aware LLMs, there are no longer technical barriers.

This is coming, and it will likely lead to an arms race in which the titans of big tech battle for bragging rights on who can pump the most powerful AI guidance into your eyes and ears. And of course, this corporate push could create a dangerous digital divide between those who can afford intelligence-enhancing tools and those who cannot. Or worse, those who can’t afford a subscription fee could be pressured to accept sponsored ads delivered through aggressive AI-powered conversational influence.

We are about to live in a world where corporations can literally put voices in our heads that influence our actions and opinions. This is the AI manipulation problem, and it is deeply worrying. We urgently need aggressive regulation of AI systems that “close the loop” around individual users in real time, sensing our personal actions while imparting custom influence.

Unfortunately, the recent Executive Order on AI from the White House did not address this issue, while the EU’s recent AI Act only touched on it tangentially. And yet, consumer products designed to guide us throughout our lives are about to flood the market. As we dive into 2024, I sincerely hope that policymakers around the world shift their focus to the unique dangers of AI-powered conversational influence, especially when delivered by context-aware assistants.

If they address these issues thoughtfully, consumers can have the benefits of AI guidance without it driving society down a dangerous path. The time to act is now.

Louis Rosenberg is a pioneering researcher in the fields of AI and augmented reality.

He is known for founding Immersion Corporation (IMMR: Nasdaq) and Unanimous AI, and for developing the first mixed reality system at the Air Force Research Laboratory. His new book, Our Next Reality, is now available for preorder from Hachette.

From: venturebeat

URL: https://venturebeat.com/ai/2024-will-be-the-year-of-augmented-mentality/